Nvidia's Custom Chip Play Is a New Moat for AI Chip Leadership

Let's try to think about this for a moment.

Hey Everyone,

I wrote this for another publication this morning and tried to restack it to this newsletter, but I couldn't, so here it is. It's related to our thesis about how well Nvidia anticipates, pivots and keeps building moats around its A.I. chip leadership.

The chip giant's shares have surged 46% this year and added about $560 billion in market value as of Monday, when they closed at a record of around $722. The stock currently trades at $716.

What's also amusing is Nvidia's positioning relative to OpenAI. Nvidia CEO Jensen Huang questioned Sam Altman's reported attempts to raise up to $7 trillion to boost GPU and chip supply, telling the World Governments Summit in Dubai that the cost would be far lower: building AI data centers globally would amount to just $2 trillion over the next five years.

Because Nvidia plays such a pivotal leadership role in the future of A.I. chips and data centers, I've found Jensen Huang increasingly interesting to listen to in recent months and years.

That $2 trillion estimate, spread out through 2029, sits awkwardly against the bizarre reports that Altman is seeking up to $7 trillion in funding for a side project to build a network of AI chip and GPU factories.

In early February, Huang said that countries around the world aiming to build and run their own artificial intelligence infrastructure at home will drive up demand for his company's products. Nations including India, Japan, France and Canada are talking about the importance of investing in "sovereign AI capabilities," Huang said in an interview with Bloomberg.

In the past five years, Nvidia's revenue has skyrocketed 147%, and the global supply-demand picture for A.I. chips is heading in a very positive direction for the company, driven by the rise of Generative AI and new kinds of models and products, including in the enterprise space.

Nvidia's revenue growth has been driven by strong demand for its graphics processing units (GPUs) in the gaming, data center, artificial intelligence, and automotive markets. Revenue is seen rising about 120% over its 2024 fiscal year, with another 60% growth expected next year. The centrality of processing chips to AI, along with Nvidia's perceived technical superiority, has convinced many that this growth is both durable and long-term.
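It's worth seeing what those two forecasts imply together. A rough back-of-the-envelope calculation, assuming the 120% and 60% figures apply to two consecutive fiscal years and compound on each other:

$$
(1 + 1.20) \times (1 + 0.60) = 2.2 \times 1.6 = 3.52
$$

In other words, if both forecasts hold, revenue would end that stretch at roughly 3.5 times its starting level, about 252% cumulative growth rather than the 180% you'd get by simply adding the two percentages.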

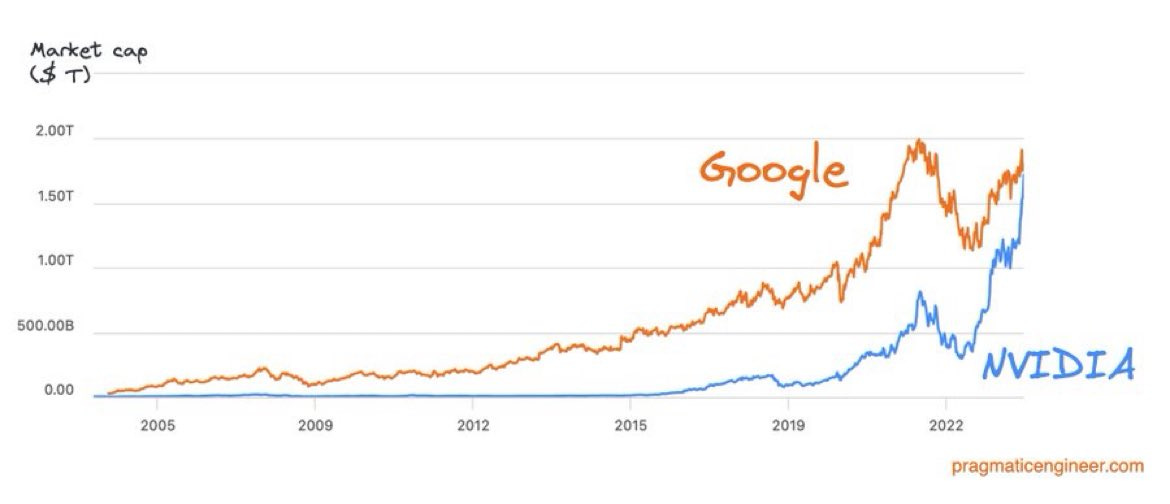

Nvidia overtook both Amazon and Google in market cap in mid-February 2024 (for the first time, even if only temporarily), and it has a lot of other exciting plans. Nvidia, which roughly translates to "envy" in Latin, has been having its AI moment.

This chart should include Amazon too, but it's just meant to illustrate how hot the AI chip and semiconductor space is.

Nvidia's H100s are the stuff of legend for LLMs and AI, but what about custom chips? Nvidia is building a new business unit focused on designing bespoke chips, including advanced artificial intelligence (AI) processors, for cloud computing firms and others, nine sources familiar with its plans told Reuters.

The data center custom chip market could swell to $10 billion this year and potentially double by 2025.