Hey Everyone,

Llama 3.1 405B, released by Meta on July 23, 2024, is the company’s latest and most advanced large language model (LLM).

That a BigTech player that has invested as much in AI chips as Meta has is going open-source is an interesting strategy. How good is Meta’s AI? It’s official: Meta can deliver a significant launch for the future of open-source AI. Let’s break this down.

How about Mark Zuckerberg in his own words? (Credit: The Rundown; see the full interview here.)

Do you support Open-Source AI?

You can also watch the interview on YouTube, if you prefer.

TIMESTAMPS

00:00 Intro

00:38 Meta’s Llama 3.1 rundown

03:44 Real-world use cases for Llama 3.1

06:15 Educating developers on open-source AI tools

09:43 Societal implications of open-source AI

13:00 Balancing power and managing bad actors

14:40 Open source and global competition

16:59 Accelerating innovation and economic growth

20:04 Zuck on Apple and lessons from the past

24:22 Future of AI: Llama 3 and beyond

26:43 Prediction: Billions of personalized AI agents

31:32 Factors to changing anti-AI sentiment

“Llama 3.1 is free, open, and on par with the best proprietary systems. To maximize performance, safety, customizability, and efficiency, AI platforms must be open, just like the software infrastructure of the Internet became open.”

Also:

How Does This Impact OpenAI’s Closed-Model Moat?

Is Meta drying up all frontier AI labs’ moats? Serious question.

And go deeper:

Meta says Llama 3.1 is available to download now from Meta or Hugging Face, with more than 25 partners rolling out the models across their platforms.

https://huggingface.co/meta-llama/Meta-Llama-3.1-405B

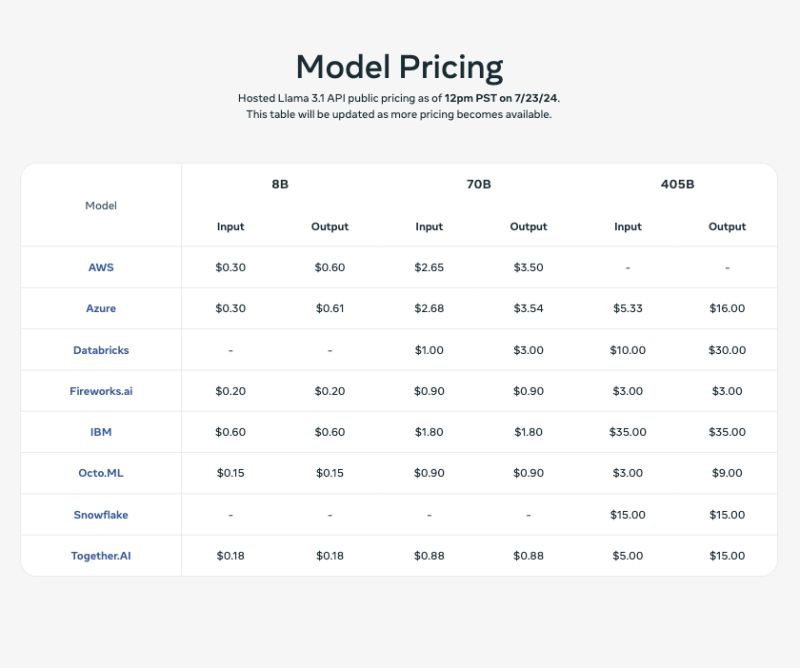

Pricing:

Big Moment in Open-Source AI

🟢 Comparable to GPT-4o and Claude 3.5 Sonnet, according to Meta’s benchmarks

🟣 The weights are publicly available

🔴 128K context, even on the smaller models

In some respects, this could be the biggest open-source release of 2024.

Advanced Features:

You can fine-tune, distill and deploy it anywhere.

Supports multiple languages & tools, with performance on par with GPT-4o.

Optimized to run on a single server using cutting-edge quantization techniques.

Advanced data preprocessing & quality assurance methods are implemented in Llama 3.1 405B.

Image, video, & voice capabilities are being integrated (still under development).

Open-source & updated licensing to foster tech sharing and applications.
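Why does quantization matter for the single-server claim above? Here is a rough back-of-the-envelope sketch. It assumes weights only (ignoring the KV cache, activations, and runtime overhead) and a hypothetical server with eight 80 GB GPUs, such as an 8×H100 node; the bytes-per-parameter values are the standard sizes for BF16, FP8, and INT4, and the `weight_memory_gb` helper is purely illustrative.

```python
# Back-of-the-envelope weight memory for a 405B-parameter dense model.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

PARAMS = 405e9  # parameter count taken from the model name

# Bytes per parameter at common numeric precisions
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

# Hypothetical single server: 8 GPUs with 80 GB each (e.g. an 8x H100 node)
GPUS_PER_SERVER = 8
GB_PER_GPU = 80
SERVER_GB = GPUS_PER_SERVER * GB_PER_GPU  # 640 GB in total


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, nbytes)
        fits = "fits" if gb <= SERVER_GB else "does not fit"
        print(f"{precision}: {gb:.1f} GB of weights, {fits} in {SERVER_GB} GB")
```

Under these assumptions, BF16 weights alone (about 810 GB) exceed a 640 GB node, while FP8 (about 405 GB) leaves headroom for the KV cache. That is roughly consistent with the single-server claim.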

“[The fact that the] 405B model is open will make it among the best choice[s] for fine-tuning and distilling smaller models.”

Mark Zuckerberg expects Meta’s AI assistant to surpass ChatGPT’s usage in the coming months, which sounds fairly far-fetched.

Benchmarks

Notable changes from Llama 3 to Llama 3.1:

MMLU:

8B: 68.4 to 73.0

70B: 82.0 to 86.0

HumanEval:

8B: 62.2 to 72.6

70B: 81.7 to 80.5

GSM8K:

8B: 79.6 to 84.5

70B: 93.0 to 94.8

MATH:

8B: 30.0 to 51.9

70B: 50.4 to 68.0
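To make these changes easier to compare at a glance, here is a small sketch that computes the point change for each benchmark. The dictionary only restates the numbers listed above; no new data is added.

```python
# Benchmark scores (Llama 3 -> Llama 3.1), restated from the list above.
SCORES = {
    ("MMLU", "8B"): (68.4, 73.0),
    ("MMLU", "70B"): (82.0, 86.0),
    ("HumanEval", "8B"): (62.2, 72.6),
    ("HumanEval", "70B"): (81.7, 80.5),
    ("GSM8K", "8B"): (79.6, 84.5),
    ("GSM8K", "70B"): (93.0, 94.8),
    ("MATH", "8B"): (30.0, 51.9),
    ("MATH", "70B"): (50.4, 68.0),
}


def deltas(scores):
    """Return {(benchmark, size): change in points}, rounded to one decimal."""
    return {key: round(new - old, 1) for key, (old, new) in scores.items()}


if __name__ == "__main__":
    for (bench, size), d in deltas(SCORES).items():
        print(f"{bench:<10} {size:>3}: {d:+.1f} points")
```

Every listed score improves except HumanEval on the 70B model, which drops 1.2 points. The largest jump is MATH on the 8B model (+21.9).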

Also see:

Meta’s Evolution in Open-Source Model Performance is Getting Impressive

Llama 3.1 405B is a dense Transformer with 405B parameters and a context window of up to 128K tokens.

Meta claims the model is competitive with leading foundation models across a range of tasks, including GPT-4, GPT-4o, and Claude 3.5 Sonnet.

Partners Starting Guide

Meta is publicly releasing Llama 3, including pre-trained and post-trained versions of the 405B-parameter language model and its Llama Guard 3 model for input and output safety.

As The Verge notes, Meta isn’t disclosing the cost of developing Llama 3.1, but based on the cost of the Nvidia chips alone, it’s safe to guess it was hundreds of millions of dollars.

Training the Model

Meta trained the model on over 16,000 H100 GPUs, making the 405B the first Llama model trained at this scale.

“Llama needs to develop into a full ecosystem of tools, efficiency improvements, silicon optimizations, and other integrations. If we were the only company using Llama, this ecosystem wouldn’t develop and we’d fare no better than the closed variants of Unix.”

Meta says that, compared to previous versions of Llama, it improved both the quantity and quality of the data used for pre- and post-training. These improvements include more careful pre-processing and curation pipelines for pre-training data, as well as more rigorous quality assurance and filtering approaches for post-training data.

At launch, it supports eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

The model is particularly effective at generating synthetic data to improve smaller models, and for knowledge distillation, which transfers knowledge from the 405B model to smaller models.
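For readers unfamiliar with distillation, here is a minimal sketch of the generic idea. A smaller "student" model is trained to match the softened output distribution of a larger "teacher" (a role the 405B model could play). This is the classic soft-target formulation from Hinton et al. (2015), not Meta’s actual training recipe, and the logits below are made-up illustrative values.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits at a given temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2.

    Generic soft-target distillation, not Meta's recipe.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # predictions from the small model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical next-token logits from a large "teacher"
    student = [2.0, 1.5, 0.2]  # hypothetical logits from a smaller "student"
    print(f"distillation loss: {distillation_loss(teacher, student):.4f}")
    print(f"loss when student matches teacher: {distillation_loss(teacher, teacher):.4f}")
```

Training the student to minimize this loss pushes its distribution toward the teacher’s. The temperature softens both distributions so the student also learns how the teacher ranks the less likely tokens.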

In a letter published on Meta’s company blog, Zuckerberg argues that open-source AI models will overtake — and are already improving faster than — proprietary models, similar to how Linux became the open-source operating system that powers most phones, servers, and gadgets today.

It’s not clear exactly what Llama’s ecosystem could turn into. But Facebook (now called Meta) is swinging hard on investment here, as many BigTech companies are possibly over-investing in Generative AI relative to the short-term revenue they will derive from it.

“An inflection point in the industry where most developers begin to primarily use open source”

Meta is working with more than two dozen companies, including Microsoft, Amazon, Google, Nvidia, Snowflake and Databricks, to help developers deploy their own versions.

Unlike closed models, Llama model weights are available to download.

Many researchers believe Meta is AI-washing and that, technically speaking, its models are not really open-source but rather open-weight models.

Read more here.

Unsurprisingly, Meta isn’t saying much about the data it used to train Llama 3.1. So how can it really be open? That is the crux of the matter.

Open-weight does not equal open-source; it is only one point on a spectrum.

Where will all of this lead to?

"Llama models were always intended to work as part of an overall system that can orchestrate several components, including calling external tools," the social network giant wrote. "Our vision is to go beyond the foundation models to give developers access to a broader system that gives them the flexibility to design and create custom offerings that align with their vision."

Open-source models will eventually usher in an era of agentic AI. While that era has already started, it might take until 2030 to see agents that truly work well at the enterprise level.

Meta’s own implementation of Llama is its AI assistant, which is positioned as a general-purpose chatbot like ChatGPT and can be found in just about every part of Instagram, Facebook, and WhatsApp. It’s not clear to me whether adoption is good, and so far I doubt it.

A commercial license from Meta is only required for companies with more than 700 million monthly active users.

An Ecosystem Advantage?

The Llama Stack will eventually form a series of standardized interfaces that define how toolchain components (for example, fine-tuning or synthetic data generation) or agentic applications should be built. Meta hopes that by crowdsourcing these efforts, such interfaces will become the industry standard.

Meta claims Llama 3.1 405B is in a class of its own, with unmatched flexibility, control, and state-of-the-art capabilities that rival the best closed source models. But for how long? That’s always the question in the race for AI Supremacy.